Intellectual Property Analysis of Digital Contents and Works in the Background of AIGC (I)

Date: 2024-03-29 06:37

Author: Wenwen MA

In November 2022, the stunning debut of ChatGPT set off a revolutionary storm in the field of AI. For more than a year since, how AI will disrupt our work and life has been the focus of heated discussion and undoubtedly the hot topic of the period. ChatGPT, as an outstanding representative of generative AI technology, has had a profound impact. From the perspective of the industrial chain, upstream computing power and data form the foundation, and the midstream gathers many large models. Before the emergence of general-purpose large models, the development of AI models was often limited to specific application scenarios. Each small model had to be trained independently, which made reuse and knowledge accumulation difficult and resulted in high thresholds and high costs for AI applications. The emergence of general-purpose large models has completely changed this situation. By extracting common knowledge from massive, multi-scenario and multi-field data, they provide a model base with wide applicability and strong generalization ability, which greatly reduces the difficulty of deploying AI applications. As a result, downstream application-layer products are springing up like mushrooms.

However, with the rapid development of generative AI technology, a series of new legal issues has also emerged, such as open source issues, information security issues and intellectual property issues, which need to be further studied and solved.

Intellectual property is a huge field, covering patents, trademarks, copyrights, trade secrets and many other aspects. These rights run through every link of the industrial chain and are important elements that cannot be ignored in the process of innovation. For a long time, giving machines creative ability has been seen as a major challenge, and creativity has therefore been considered the most essential difference between humans and machines. Early AI, which we call weak AI, was only capable of completing specific tasks or solving specific problems. Generative AI has not yet reached the status of strong AI, but by learning from large amounts of training data it has been able to demonstrate some ability to learn, reason, plan and solve problems, and to generate various kinds of content either automatically or with human assistance.

Content generated by AI comes in a variety of forms, including text, images, video, code and 3D content, all of which are forms that fall within the scope of copyright law. But the question is whether AI-generated content itself can be protected by copyright. If so, who owns the copyright? The AI itself? The developers of the AI? The users of the AI? And what are the potential infringement risks that AIGC faces? Is it possible for our original content to be infringed by AI? These issues have attracted a lot of attention and discussion in the industry, but there are still many ambiguities at the legal level. We will explore these issues in two separate articles.

Because of its complexity, AIGC technology is often described as a "black box" algorithm, which undoubtedly makes the exploration of legal boundaries more difficult. In short, AIGC mainly covers two core stages: the first is model building and training, and the second is the use of trained models to generate content.

The model is the cornerstone of AIGC, and its accuracy is closely related to the amount and variety of training data. The training data comes from a variety of sources, such as publicly available datasets, user-generated content, in-house and partner data, crowdsourcing and tagging services, and purchased third-party data. Public data, as the primary data source, is often obtained using crawler technology. Although crawling as a means of obtaining data is not prohibited by law, the use of crawlers must comply with relevant agreements and ensure the legality of the data. If a crawler is used improperly, for example by disrupting the operation of a website through high-frequency access in a short period of time, or for improper purposes such as stealing personal information or conducting unfair competition, its operator may face legal risks.
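As an illustration of what honoring a site's crawling rules can look like in practice, the following minimal sketch uses Python's standard `urllib.robotparser` module to check whether a URL may be fetched and how long to wait between requests. The sample robots.txt content, the user agent name `example-training-bot` and the helper function names are assumptions made for this example. Following robots.txt addresses only one of the "relevant agreements" mentioned above and does not by itself establish that the collected data may lawfully be used for training.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here instead of a live download.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

# Hypothetical crawler name chosen for this example.
USER_AGENT = "example-training-bot"


def build_policy(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a policy object."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_fetch(parser: RobotFileParser, url: str) -> bool:
    """Return True only if the site's rules allow this crawler to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)


def polite_delay(parser: RobotFileParser, default_seconds: float = 1.0) -> float:
    """Seconds to wait between requests, to avoid high-frequency access."""
    delay = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default_seconds


policy = build_policy(SAMPLE_ROBOTS_TXT)
allowed = may_fetch(policy, "https://example.com/articles/1.html")
blocked = may_fetch(policy, "https://example.com/private/data.html")
wait_seconds = polite_delay(policy)
```

A crawler built on this sketch would skip any URL for which `may_fetch` returns `False` and sleep for `polite_delay` seconds between requests. Whether the fetched content is copyrighted is a separate question that such a check cannot answer.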

The right of reproduction as stipulated in China's Copyright Law refers to the right to make one or more copies of a work by printing, photocopying, rubbing, sound recording, video recording, duplicating, re-shooting, digitization and similar means. A crawler, however, cannot identify the copyright status of the content it captures. If copyrighted content crawled without authorization is used for model training, the data needs to be downloaded locally during the training process, which is likely to infringe the copyright of others. In practice, users also voluntarily "feed" data to AI to meet their own needs, which likewise poses the risk of using unauthorized content. China's Copyright Law does provide fair use exemptions, including "personal use" for personal study, research or appreciation; "appropriate citation" to introduce or comment on a work or to illustrate a point; and "scientific research" for teaching and scientific research needs. However, the training scenarios of most existing AI models do not fit these exemptions.

In addition to the Copyright Law, other laws and regulations in China also impose requirements on data acquisition and model training. For example, Article 7 of the "Generative Artificial Intelligence Service Management Measures", which officially took effect in August 2023, stipulates that providers are responsible for the legitimacy of the sources of the pre-training data and optimization training data of generative AI products, and its second item clearly stipulates that such data must not contain content that infringes intellectual property rights. The Anti-Unfair Competition Law also requires that data crawling be lawful.

AI entrepreneurs and AI platform operators must fully understand and consider these legal risks during construction and operation, ensure compliant operations, and avoid potential legal disputes.

From a global perspective, it is not uncommon for AI platform developers to be sued for copyright infringement. Take Stable Diffusion, a well-known image-generating AI platform, for example. The LAION-5B database on which its model is trained is large, free and open source, but it contains a large number of copyrighted works. The database crawls content from e-commerce platforms, video sites, news sites and more, and the diversity of its data sources also brings potential copyright issues. Although LAION-5B does not directly store the images themselves, the training process requires downloading the works locally, which undoubtedly involves reproduction of copyrighted works.

In early 2023, the well-known image provider Getty Images filed a lawsuit against Stable Diffusion developer Stability AI, accusing it of using more than 12 million Getty Images photos to train an AI model without authorization. What's more, images produced by Stable Diffusion appear to bear a distorted and blurred Getty Images watermark, which undoubtedly increases the suspicion of infringement. The case is still pending. While Stability AI has tried to argue that the English courts have no jurisdiction, the court has pointed out inconsistencies in its testimony and regarded Getty Images' claims as sufficiently substantiated to warrant further investigation.

Left: image by Getty Images
Right: AI-generated image

Images are from the Internet and are used for communication only

There have also been several class-action lawsuits by artists in the US against generative AI service providers such as Stability AI, Midjourney and DeviantArt. However, although the artists cited a large number of images in the lawsuit, only a few of those images had been registered for copyright, and the court ultimately did not support their claims due to insufficient evidence.

From these two cases, it can be seen that when training data has intellectual property flaws, AI platform developers do face the risk of infringement. However, in actual litigation, factors such as the copyright status and quantity of the original works and the AI-generated output will have an important impact on the outcome. Therefore, original authors whose works may be used to train AI models should take timely copyright protection measures to ensure that their rights and interests are not infringed.

In February 2024, the Guangzhou Internet Court heard a case involving copyright infringement by an AI platform, which attracted wide attention. In the case, the plaintiff held an exclusive license to the images of the Ultraman series, together with the right to enforce it, while the defendant was a website providing generative AI services. The court found that when a user entered relevant instructions through the Convert Text To Picture function on the defendant's website, the generated image of Ultraman was substantially similar to the Ultraman images licensed to the plaintiff, and therefore infringed the plaintiff's rights of reproduction and adaptation in the works involved.

The decision caused a lot of controversy in the industry. In the AIGC industry chain, downstream application platform developers like the defendant in this case often buy midstream models directly and do not participate in the model training process, so they do not themselves carry out any copying. If platform developers are able to show a legitimate source for the model, how liability should be allocated becomes an issue worth discussing. In addition, the judgment says that platforms should exercise a reasonable duty of care, but how to define "duty of care" and whether fulfilling it can be a basis for exoneration is also controversial. Whether midstream model manufacturers should also bear a duty of care, and whether they can be exempted after fulfilling it, likewise needs to be further explored. This series of issues is not only related to the legal liability of AI platform developers, but is also of great significance for the healthy development of the entire AIGC industry chain. It is therefore necessary to conduct in-depth research on these problems in order to seek more reasonable legal solutions.

In both the Getty Images case and the Ultraman case, the plaintiffs relied on the content generated by the AI platform as the main basis for their infringement claims. When an AI platform generates content, users first input their own ideas and preset instructions, the AI then generates content according to these instructions, and users sift through the results until they obtain a satisfactory work. Taking AI image generation as an example, the model extracts image features and rules from its training data, combines them with a degree of randomness to generate new images, and brings them more in line with users' visual needs through optimization and adjustment. Although users input the instructions, it is questionable how much creative content these instructions contain, because the generation of images still largely relies on the model's training data and algorithms. Therefore, there may be similarities between the AI-generated images and the training data, which in turn poses the risk of infringement.

Left: original image
Right: generated by AI

Images are from the Internet and are used for communication only

Of the two images, the left is the original and the right is generated by AI, and they show a high degree of visual similarity. In China's judicial practice, the judgment of copyright infringement is mainly based on the principle of "access + substantial similarity". When judging whether the defendant had access to the prior work, or whether access was possible, courts usually consider whether the work has been published. However, in the case of non-open-source models and opaque datasets, there are considerable difficulties in tracing the source of the dataset and producing evidence of it.

Substantial similarity should be assessed from the perspective of the ordinary reader, listener or viewer. At the same time, it should be made clear that copyright protects the form of expression, not the underlying ideas. Therefore, the judgment of infringement should be limited to the formal expression of the work rather than the abstract idea or concept.

AI-generated content may be highly similar to the original because it amounts to a simple modification, as with the two images mentioned earlier. More often, however, AI is able to learn and imitate the unique style of a particular work. One might argue that copyright does not protect style, and that mere stylistic similarity does not constitute infringement. However, this view needs to be judged on a case-by-case basis. The following two images illustrate the complexity. Mondrian's work is known for its unique style of grid composition and color combination. In a direct comparison, the AI-generated image is not identical to Mondrian's original works and may even be more complex. However, those familiar with Mondrian's work can easily recognize traces of his style in the AI-generated image, including its unique expression, constituent elements and visual effects. The similarities are enough to confuse the ordinary audience.

Mondrian's work and an AI-generated image

Images are from the Internet and are used for communication only

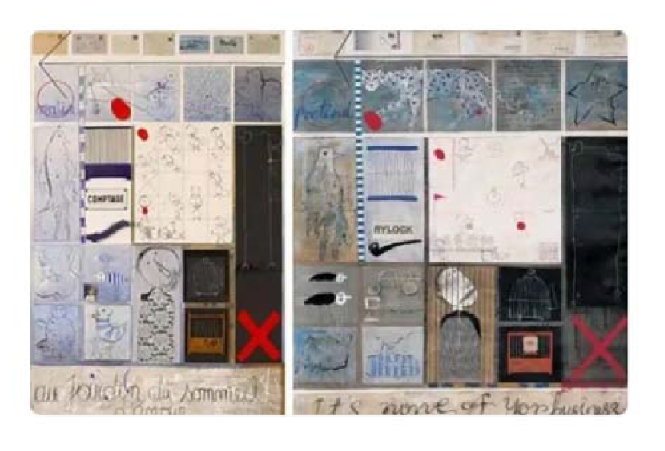

In addition, let's look at another striking case that may give us more insight. The plaintiff is the Belgian painter Sylvain, whose paintings use squares as a background and incorporate graffiti-style iconic elements such as "birds, nests, cages, children, airplanes, animals" to form his own unique artistic style. The defendant, a domestic painter, "borrowed" from Sylvain's style and used similar iconic elements to create his own works. In the first instance judgment, the court found that 22 of the defendant's paintings were substantially similar as a whole to Sylvain's works, and identified a further 76 instances of partial substantial similarity (in combinations of elements) and 84 instances of substantial similarity in single elements.

Left: Sylvain's work

Right: domestic painter's work

Images are from the Internet and are used for communication only

From this case, we can conclude that the expression of a work of art is mainly reflected in the artistic form created by the organic integration of aesthetic factors such as composition, line, color and shape. In the minds of ordinary audiences, style contains not only ideas but also elements of expression. Therefore, when judging whether AI-generated content constitutes infringement, we need to consider the overall style of the work, the way it is expressed, and the degree of confusion it may cause to the audience.